Motivation

Are you a scientist who suffers from bursitis¹? Do you loathe dealing with runtimes and infrastructure? Is your favorite calculus the $\lambda$-calculus? Do you have commitment issues with respect to your cloud provider? Well, do I have an offering for you: presenting funcX, a High Performance Function(s) as a Service (HPFaaS) Python framework.

In all seriousness, though, HPFaaS is a software development paradigm in which the fundamental unit of computation is the function and everything else is abstracted away. Availing oneself of these abstractions enables one to benefit from data-compute locality² and from distributing work across heterogeneous resources (such as GPUs, FPGAs, and ASICs). Another name for this kind of software is “serverless computing”; in the context of the workloads that scientists typically have, we call it “serverless supercomputing”.

Some example projects that use funcX are:

- Synchrotron Serial Crystallography (SSX) is a method for imaging small crystal samples 1–2 orders of magnitude faster than other methods; using funcX, SSX researchers were able to discover a new structure related to COVID

- DLHub uses funcX to support the publication and serving of ML models for on-demand inference for scientific use cases

- Large distributed file systems produce new metadata at high rates; Xtract uses funcX to extract metadata where the data reside rather than aggregating the data centrally

- Real-time high-energy physics analysis using Coffea and funcX can accelerate studies of decays such as $H \rightarrow b\bar{b}$

But what is funcX?

funcX works in three steps. You deploy the funcX endpoint agent on an arbitrary computer, register a funcX function with a centralized registry, and then call the function using either the Python SDK or a REST API. To get to the fun stuff quickly, we defer discussion of deploying a funcX endpoint until the next section and use the tutorial endpoint.

To declare a funcX function, you just define a conventional Python function:

```python
def funcx_sum(items):
    return sum(items)
```

Et voilà! To register the function with the centralized funcX function registry service, we simply call register_function:

```python
from funcx.sdk.client import FuncXClient

fxc = FuncXClient()

func_uuid = fxc.register_function(
    funcx_sum,
    description="A summation function",
)
```

The returned func_uuid is then used to call the function on an endpoint, here the tutorial endpoint:

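```python
endpoint_uuid = '4b116d3c-1703-4f8f-9f6f-39921e5864df'  # tutorial endpoint

items = [1, 2, 3, 4, 5]

res = fxc.run(
    items,
    endpoint_id=endpoint_uuid,
    function_id=func_uuid,
)

# run() returns a task id; if the task has not finished yet, get_result
# may raise an exception, in which case wait briefly and try again.
print(fxc.get_result(res))
# 15
```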

And that’s all there is to it! The only caveat (owing to how funcX serializes functions) is that any libraries or packages a function uses must be imported within the body of the function, e.g.:

```python
def funcx_sum_2(items):
    import numpy as np  # imported inside the function body

    return np.sum(items)
```
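The snippet below illustrates why the imports have to live inside the function. It simulates shipping a function to a remote worker as source text and rebuilding it in an empty namespace. This is a hypothetical stand-in, not funcX's actual serializer. The names rebuild_from_source, product_outer and product_inner are illustrative. math.prod replaces numpy so the sketch needs only the standard library.

```python
import math  # visible to the caller here, but never shipped with the function
import textwrap


def rebuild_from_source(source: str, name: str):
    """Recreate a function from its source text inside an empty namespace.

    Hypothetical stand-in for a remote worker: only the function's own text
    travels, so the caller's module-level imports are not available.
    """
    namespace: dict = {}
    exec(textwrap.dedent(source), namespace)
    return namespace[name]


# Relies on the caller's module-level `import math`.
OUTER_IMPORT_SRC = """
def product_outer(items):
    return math.prod(items)
"""

# Imports inside the body, so the function carries its own dependency.
INNER_IMPORT_SRC = """
def product_inner(items):
    import math
    return math.prod(items)
"""

product_inner = rebuild_from_source(INNER_IMPORT_SRC, "product_inner")
product_outer = rebuild_from_source(OUTER_IMPORT_SRC, "product_outer")

print(product_inner([1, 2, 3, 4, 5]))  # 120

try:
    product_outer([1, 2, 3, 4, 5])
except NameError as err:
    # The rebuilt function cannot see the caller's `import math`.
    print("NameError:", err)
```

The function that imports inside its body works anywhere its source lands. The other one fails as soon as it runs without the caller's globals.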

Deploying a funcX endpoint

An endpoint is a persistent service, launched by the user on their compute system, that routes requests to and executes functions on that system. Deploying a funcX endpoint is eminently straightforward. The endpoint can be configured to connect to the funcX web service at funcx.org; once the endpoint is registered, you can invoke functions on it.

You can pip install funcx to get the funcX package onto your system.

Once funcX is installed, initializing the endpoint will ask you to authenticate with Globus Auth:

```console
$ funcx-endpoint init
Please paste the following URL in a browser:
https://auth.globus.org/v2/oauth2/authorize?client_id=....
Please Paste your Auth Code Below:
```

funcX requires authentication in order to associate endpoints with users and to enforce access control on the endpoint.

Creating, starting, and stopping an endpoint is as simple as:

```shell
funcx-endpoint configure <ENDPOINT_NAME>
funcx-endpoint start <ENDPOINT_NAME>
funcx-endpoint stop <ENDPOINT_NAME>
```

Details on setting configuration parameters and other options are available in the documentation, but there’s not much more to it than that.

You can deploy endpoints anywhere that you can run pip install funcx.

Architecture and Implementation

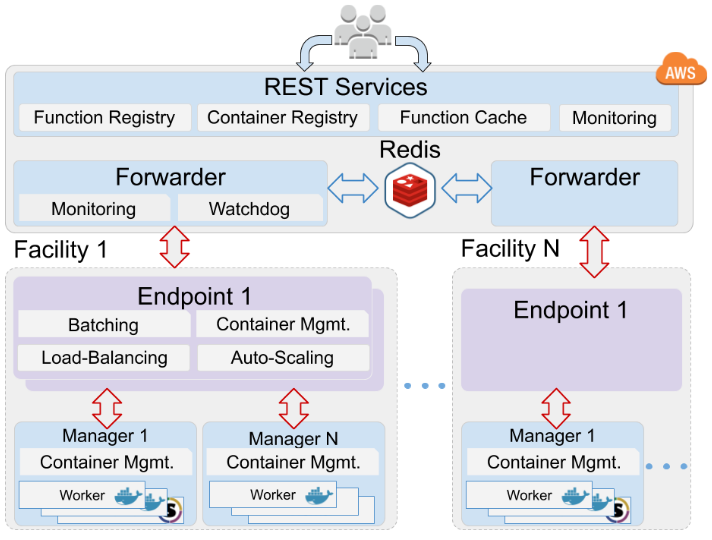

funcX consists of endpoints and a registry that publishes endpoints and registered functions.
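The toy model below shows the registry's role: it publishes functions and endpoints under UUIDs so a caller can pair them by id. It is a hypothetical, in-memory, synchronous sketch, not the funcX service. The method names echo the SDK calls above only for readability. Here an "endpoint" is assumed to be any callable that executes a function with its arguments.

```python
import uuid
from typing import Any, Callable, Dict, Tuple

# Hypothetical: an endpoint is modeled as "something that runs func(*args)".
Executor = Callable[[Callable[..., Any], Tuple[Any, ...]], Any]


class ToyRegistry:
    """In-memory registry that publishes functions and endpoints by UUID."""

    def __init__(self) -> None:
        self.functions: Dict[str, Callable[..., Any]] = {}
        self.endpoints: Dict[str, Executor] = {}

    def register_function(self, func: Callable[..., Any]) -> str:
        function_id = str(uuid.uuid4())
        self.functions[function_id] = func
        return function_id

    def register_endpoint(self, executor: Executor) -> str:
        endpoint_id = str(uuid.uuid4())
        self.endpoints[endpoint_id] = executor
        return endpoint_id

    def run(self, *args: Any, endpoint_id: str, function_id: str) -> Any:
        # Look up both halves by id, then hand the function to the endpoint.
        func = self.functions[function_id]
        execute = self.endpoints[endpoint_id]
        return execute(func, args)


def funcx_sum(items):
    return sum(items)


registry = ToyRegistry()
local_endpoint = registry.register_endpoint(lambda func, args: func(*args))
sum_id = registry.register_function(funcx_sum)
print(registry.run([1, 2, 3, 4, 5], endpoint_id=local_endpoint, function_id=sum_id))  # 15
```

The real service returns a task id and executes asynchronously on a remote endpoint. This sketch returns the result directly to keep the id-based lookup in focus.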

Each endpoint runs a daemon that spawns managers, which in turn orchestrate a pool of workers that run funcX functions within containers³.
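The endpoint → manager → worker hierarchy can be sketched with the standard library. This is a simplified model under stated assumptions. Workers are threads rather than containerized processes. The endpoint routes tasks to managers round-robin, which stands in for funcX's real scheduling. The Endpoint and Manager classes are hypothetical names.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from itertools import cycle
from typing import Any, Callable, List


class Manager:
    """Hypothetical manager: owns a fixed-size pool of workers."""

    def __init__(self, name: str, workers: int) -> None:
        self.name = name
        self.pool = ThreadPoolExecutor(max_workers=workers, thread_name_prefix=name)

    def submit(self, func: Callable[..., Any], *args: Any) -> Future:
        return self.pool.submit(func, *args)

    def shutdown(self) -> None:
        self.pool.shutdown(wait=True)


class Endpoint:
    """Hypothetical endpoint daemon: spawns managers and routes tasks to them."""

    def __init__(self, managers: int, workers_per_manager: int) -> None:
        self.managers: List[Manager] = [
            Manager(f"manager-{i}", workers_per_manager) for i in range(managers)
        ]
        self._next_manager = cycle(self.managers)  # round-robin routing (assumption)

    def submit(self, func: Callable[..., Any], *args: Any) -> Future:
        return next(self._next_manager).submit(func, *args)

    def shutdown(self) -> None:
        for manager in self.managers:
            manager.shutdown()


endpoint = Endpoint(managers=2, workers_per_manager=4)
futures = [endpoint.submit(pow, n, 2) for n in range(8)]
squares = [f.result() for f in futures]
print(squares)  # [0, 1, 4, 9, 16, 25, 36, 49]
endpoint.shutdown()
```

Splitting the pool across managers lets each manager own one allocation (for example, one node) while the endpoint keeps a single entry point for requests.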

The endpoint also implements fault tolerance using a watchdog process and heartbeats from the managers.
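Heartbeat-based fault detection fits in a few lines. The endpoint records when each manager last checked in. Any manager silent for longer than a timeout is presumed lost, and its in-flight tasks are handed back for resubmission. This is a hypothetical sketch, not funcX's watchdog. The Watchdog class, the 30-second timeout and the resubmit-on-loss policy are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Watchdog:
    """Hypothetical heartbeat monitor for an endpoint's managers.

    Timestamps are passed in explicitly (e.g. from time.monotonic()) so the
    logic is easy to test; a real daemon would poll on a timer.
    """

    timeout: float  # seconds of silence before a manager is presumed lost
    last_seen: Dict[str, float] = field(default_factory=dict)
    in_flight: Dict[str, List[str]] = field(default_factory=dict)

    def heartbeat(self, manager_id: str, now: float) -> None:
        self.last_seen[manager_id] = now
        self.in_flight.setdefault(manager_id, [])

    def assign(self, manager_id: str, task_id: str) -> None:
        self.in_flight.setdefault(manager_id, []).append(task_id)

    def reap(self, now: float) -> List[str]:
        """Forget silent managers and return their tasks for resubmission."""
        lost = [m for m, seen in self.last_seen.items() if now - seen > self.timeout]
        orphaned: List[str] = []
        for manager_id in lost:
            del self.last_seen[manager_id]
            orphaned.extend(self.in_flight.pop(manager_id, []))
        return orphaned


watchdog = Watchdog(timeout=30.0)
watchdog.heartbeat("manager-0", now=0.0)
watchdog.heartbeat("manager-1", now=0.0)
watchdog.assign("manager-1", "task-a")
watchdog.assign("manager-1", "task-b")
watchdog.heartbeat("manager-0", now=25.0)  # manager-1 goes quiet
orphaned = watchdog.reap(now=40.0)
print(orphaned)  # ['task-a', 'task-b']
```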

Communication between the funcX service, the endpoints, and the managers all happens over ZeroMQ. For the misers⁴ in the audience, funcX implements the standard optimization strategies for reducing latency and compute cost (memoization, container warming, and request batching). For the paranoiacs⁴ in the audience, funcX authenticates and authorizes function registration and invocation using Globus Auth, and sandboxes functions using containers and the file system namespacing they provide. More details (along with performance metrics and comparisons with commercial competitors) are available in the funcX paper.
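Two of those optimizations, memoization and request batching, can be illustrated in a few lines. This is a hypothetical sketch of the general techniques, not funcX's implementation. Memo and batched are illustrative names, and "funcx_sum" is a made-up function id. The cache key assumes functions are deterministic and free of side effects, and that equal arguments pickle to identical bytes.

```python
import pickle
from typing import Any, Callable, Dict, Iterator, List, Sequence, Tuple


def batched(requests: Sequence[Any], size: int) -> Iterator[Sequence[Any]]:
    """Group requests so that one round trip carries up to `size` of them."""
    for start in range(0, len(requests), size):
        yield requests[start:start + size]


class Memo:
    """Cache results keyed on (function id, pickled arguments).

    Assumes deterministic, side-effect-free functions and arguments whose
    pickles are stable (true for simple lists of ints).
    """

    def __init__(self) -> None:
        self.cache: Dict[Tuple[str, bytes], Any] = {}
        self.misses = 0  # how many times a function body actually ran

    def call(self, function_id: str, func: Callable[..., Any], *args: Any) -> Any:
        key = (function_id, pickle.dumps(args))
        if key not in self.cache:
            self.misses += 1
            self.cache[key] = func(*args)
        return self.cache[key]


memo = Memo()
requests = [[1, 2], [3, 4], [1, 2], [5], [3, 4]]
results: List[int] = []
for batch in batched(requests, size=2):  # 3 batches instead of 5 round trips
    results.extend(memo.call("funcx_sum", sum, items) for items in batch)

print(results)      # [3, 7, 3, 5, 7]
print(memo.misses)  # 3
```

Batching trims per-request network overhead, and memoization skips re-running work whose answer is already known. The two compose naturally because the cache is consulted per request inside each batch.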

Conclusion

funcX is for scientists whose compute needs fluctuate dramatically in time and resource requirements. The project is open source (available on GitHub) and provides a Binder instance that you can experiment with immediately. If you have any questions or you’re interested in contributing, feel free to reach out to the project or to me directly!